Let us begin with something simple and familiar: a cake—a staple at celebrations and gatherings of all kinds. It may seem like an unlikely starting point, but bear with me; this everyday example will serve to illustrate a much deeper concept shortly.

A cake recipe might include:

- One hundred nonillion (1 followed by 32 zeros) particles of wheat flour

- ½ cup of butter

- 1½ cups of refined sugar

- 1 cup of milk

- 3½ teaspoons of baking powder

- 1 teaspoon of salt

- 1 teaspoon of vanilla extract

- 3 eggs

The preparation is straightforward:

- Preheat the oven to 180°C (350°F) and grease a 23 × 33 cm baking pan. Mix the salt and baking powder into the flour and set aside.

- Cream the butter and sugar in a large bowl until fluffy. Add the eggs one by one, mixing well after each.

- Alternate adding the flour mixture and the milk, beating until smooth. Stir in the vanilla.

- Pour into the pan and bake for 45 minutes.

Now, imagine you could count every single flour particle. Here is the question: if you accidentally added one extra particle, or left one out, would that microscopic error ruin the entire cake? Would it collapse in the oven or become inedible?

Of course not. A cake is forgiving. Tiny deviations in quantity do not alter the result in any meaningful way.

And yet, as we will soon see, when it comes to the formation of the universe, the situation is very different. In that case, an error as small as a single figurative “flour particle” could have made all of existence impossible. The cake is just an analogy—but it prepares us to understand the astonishing precision required at the dawn of the cosmos.

Let us explore that in more detail.

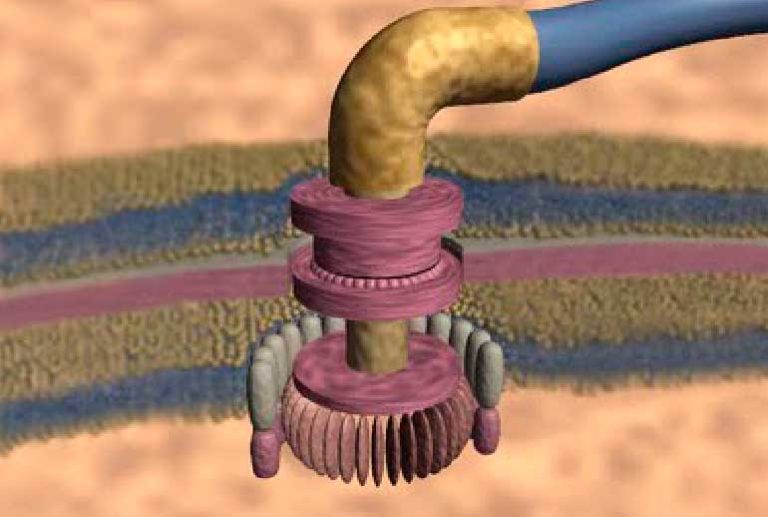

When you hear the word “atom,” you might immediately picture a cluster of spheres at the center, with smaller spheres orbiting around them in concentric circles. If so, you are visualizing the Bohr model of the atom, proposed in 1913 by Danish physicist Niels Bohr[1].

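In the familiar textbook version of this model, the atom consists of a dense central nucleus made up of protons and neutrons, with electrons revolving around it in distinct energy levels or “shells.” (The neutron itself was only discovered in 1932, after Bohr’s original proposal.) In this structure: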

- Protons carry a positive charge.

- Electrons carry a negative charge.

- Neutrons are electrically neutral.

Bohr’s model was groundbreaking at the time, offering a simple and intuitive representation of atomic structure. While later developments in quantum mechanics would refine and expand our understanding, this iconic image of orbiting electrons still shapes how many people imagine the atom today.

The concept of the atom—a fundamental, indivisible unit of matter—dates to ancient Greece, where it arose more from philosophical reasoning than empirical science. Thinkers like Democritus proposed that all matter was composed of tiny, indivisible particles called atoms, not based on experiments, but as a logical necessity to explain the nature of change and continuity in the physical world.

It was not until many centuries later, in the early 19th century, that the idea began to take on scientific form. In 1804, John Dalton proposed that all atoms of a given element are identical in mass and properties, and distinct from the atoms of any other element[2]. This marked a pivotal moment in atomic theory, initiating the systematic study of chemical behavior at the atomic level.

As knowledge progressed, scientists began cataloging elements based on their atomic properties. In 1869[3], Dmitri Mendeleev published the first organized inventory of elements arranged by atomic mass—the forerunner of today’s periodic table. Mendeleev’s table not only organized known elements but also predicted the existence and properties of undiscovered ones with remarkable accuracy.

The next major step in atomic theory was an attempt to visualize the structure of the atom. This gave rise to various atomic models, each attempting to explain observed chemical and physical phenomena. Among the most influential was Niels Bohr’s model (1913), which depicted electrons orbiting a central nucleus in defined paths or shells, laying the foundation for quantum theory.

These evolving models represent a crucial shift from philosophy to science—transforming the atom from an abstract idea into a central pillar of modern chemistry and physics.

Each new scientific discovery about the atom opened the door to even more unanswered questions—many of which remain unresolved to this day. Among the most pressing mysteries that intrigued early physicists was a fundamental one: What gives the atom its stability?

Why do protons and neutrons, with their considerable mass, bind together in the nucleus instead of drifting apart? What force causes them to coalesce and stay compacted at the center of the atom? Similarly, how does the electron manage to orbit the nucleus indefinitely, neither spiraling inward toward the proton nor flying off into space?

This becomes even more perplexing when we consider electromagnetism, one of the most well-understood laws of physics. According to it, like charges repel, and opposite charges attract. If that is the case, then:

- How can multiple positively charged protons coexist in the nucleus without repelling each other violently?

- And if the electron carries a negative charge while the proton is positive, why don’t they simply collapse into each other under the force of attraction?

These questions revealed that something beyond electromagnetism must be at work—something strong enough to counteract the repulsive forces within the nucleus and delicate enough to keep electrons in dynamic balance at a precise distance.

Physicists eventually proposed the existence of the strong nuclear force to explain this—but even this “solution” only raised more questions about the fine-tuned nature of physical constants and the underlying principles that govern matter. The more we uncover about atomic structure, the more we are confronted not just with complexity, but with a remarkable precision that seems anything but accidental.

In the 20th century, scientists identified a force operating within the heart of the atom: the strong nuclear force[4]. This force is far more powerful[5] than electromagnetism, and it plays a vital role in atomic stability. Without it, protons, which all carry positive electric charges, would naturally repel one another and fly apart. But the strong nuclear force overcomes this repulsion, binding protons and neutrons together within the nucleus and allowing atoms to exist.

But what if this force were altered—even slightly?

- If the strong nuclear force were eliminated, protons would no longer be held together. The nucleus would disintegrate, and atoms would cease to exist entirely. No atoms means no matter: no stars, no planets, no life.

- If the force were slightly stronger, it would overpower the balance between the nucleus and the orbiting electrons. The electron could be pulled into the nucleus, merging with the protons and destroying the atom’s structure. Again, atoms would not exist.

- Conversely, if the strong nuclear force were slightly weaker, it would no longer be able to hold the protons together. The electromagnetic force, which pushes like charges apart, would dominate, causing the nucleus to fly apart. Atoms could never form in the first place.

Without atoms, there can be no molecules. Without molecules, chemistry cannot occur. And without chemistry, there would be no stars, no planets, no life, and no universe as we know it.

In short, the strong nuclear force must have precisely the right value—not too strong, and not too weak—to ensure the stability of the atom. Its delicate balance is one of the most striking examples of fine-tuning in the universe. The existence of matter itself hinges on a force that is, quite literally, just right.

Gravity, also known as gravitation, is one of the four fundamental forces of nature. It is the force that draws two objects with mass toward one another. Though it is vastly weaker than the strong nuclear force, it plays an essential role in the formation and structure of the universe.

After the Big Bang, the universe was composed almost entirely of hydrogen atoms, the simplest atoms, each made of just one proton and one electron. Despite gravity’s comparative weakness, it was just strong enough to begin pulling nearby hydrogen atoms toward one another. As atoms gathered, they formed clumps of matter. These clumps had more mass, which in turn generated more gravitational pull, allowing them to attract atoms that were farther away. Over millions of years, these growing masses formed massive gas clouds that eventually collapsed under their own gravity, igniting nuclear fusion and giving birth to stars.

When massive stars exhaust their fuel, they explode in a supernova, scattering newly formed elements—everything from carbon to uranium—across space. These elements coalesce again under the influence of gravity, forming planets, moons, and rocky worlds like our own. Smaller stars, like our Sun, do not explode but eventually burn out and settle into a dense, inert state—an eventual fate for our solar system (see Appendix C).

As this illustrates, gravity is the architect of the cosmos—the force responsible for the existence of stars, planets, and life itself. But what if gravity had been just a tiny bit different?

- If gravity were slightly weaker, atoms would never have clumped together. No stars, no planets, and no chemistry would have formed.

- If gravity were slightly stronger, atoms would have collapsed into a single dense mass shortly after the Big Bang. Again, no stars or planets—just one massive, lifeless object.

But how slight is “slight”?

To understand this, let us grasp the scale of measurement:

- A centimeter is one hundredth of a meter.

- A millimeter is one tenth of a centimeter.

- A nanometer is one millionth of a millimeter.

- A yoctometer is one septillionth of a meter: 1 meter ÷ 1,000,000,000,000,000,000,000,000.
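The scale above can be checked with a few lines of arithmetic. The following is only an illustrative sketch in Python; the dictionary `UNITS_IN_METERS` and the helper `per_meter` are names made up for this example and do not come from any library.

```python
from fractions import Fraction

# Each unit expressed as an exact fraction of a meter (standard SI prefixes).
UNITS_IN_METERS = {
    "centimeter": Fraction(1, 10**2),
    "millimeter": Fraction(1, 10**3),
    "nanometer": Fraction(1, 10**9),
    "yoctometer": Fraction(1, 10**24),
}


def per_meter(unit: str) -> int:
    """Return how many of the given unit fit into one meter."""
    return int(1 / UNITS_IN_METERS[unit])


# Checks of the statements in the list above.
assert UNITS_IN_METERS["millimeter"] == UNITS_IN_METERS["centimeter"] / 10
assert UNITS_IN_METERS["nanometer"] == UNITS_IN_METERS["millimeter"] / 10**6

# Prints a 1 followed by 24 zeros, grouped with commas.
print(f"{per_meter('yoctometer'):,}")
```

Exact fractions are used instead of floating-point numbers so that the comparisons hold without rounding error.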

Now, here is the astonishing part: The gravitational constant, the value used in the formula that calculates the force of gravity[6], must be so precise that even a change as small as one part in a septillion (the proportion of a yoctometer to a meter) would make the universe uninhabitable.

- If that constant were just slightly smaller, gravity would be too weak to form stars and galaxies.

- If it were just slightly larger, matter would collapse too quickly into singularities before anything could form.

Only one incredibly narrow range of values allows for a universe that can support complex structures—and life.
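Note [6] gives the formula in which the gravitational constant appears. As a rough illustration only, here is that formula written in Python. The function name `gravitational_force` is an invention for this sketch, and the value of G is an assumption brought in from outside the text: the commonly quoted measured value of about 6.674 × 10⁻¹¹ N·m²/kg².

```python
# Approximate measured value of the universal gravitational constant,
# in N·m²/kg². This number is an assumption; the text does not state it.
G = 6.674e-11


def gravitational_force(m1: float, m2: float, d: float, g: float = G) -> float:
    """Force in newtons between masses m1 and m2 (kg) separated by d (m)."""
    if d <= 0:
        raise ValueError("distance must be positive")
    return g * m1 * m2 / d**2


# Two 1 kg masses held 1 m apart attract each other with a force
# numerically equal to G itself.
force = gravitational_force(1.0, 1.0, 1.0)
print(force)

# The force is directly proportional to the constant: doubling G doubles it.
doubled = gravitational_force(1.0, 1.0, 1.0, g=2 * G)
print(doubled / force)
```

The sketch shows only that the force scales in direct proportion to G. It does not model star formation or the narrow range of values discussed here.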

This same principle applies to the strong nuclear force. Its range is tiny, less than a billionth of a millimeter, and its strength must also be finely tuned. If that strength were changed by a proportion as small as a billionth of a yoctometer compared with a meter, atomic nuclei could not form and matter itself would not exist.

That is how delicate the balance is. When we use the word “slightly” in the context of the physical constants of the universe, we are referring to changes so minuscule that they stretch the limits of comprehension. Yet those infinitesimal differences determine whether the universe exists—or collapses into nothingness.

So, we must ask:

Is this extraordinary precision the result of random chance? Or does it point to something more—a purposeful design? Coincidence? Luck? Or something greater?

As of the time of writing, scientists have identified at least ninety-three known forces, constants, proportions, velocities, and distances that govern the formation and preservation of all matter in the universe. Each of these values is set with extraordinary precision. It is precisely because of their current, exact values that the universe is stable, structured, and capable of supporting life. Even the slightest deviation in any one of these constants would disrupt the behavior of matter and render the universe uninhabitable.

This raises a fundamental question: Could chance have produced the exact values necessary for everything we observe to exist? The odds involved are not merely long; they are astronomically so.

Take, for instance, the gravitational constant, which determines the strength of gravity. According to physicists, only one value in 10²⁷⁹ possible options would result in a universe capable of forming stable atoms—the foundational building blocks of matter and life (see Appendix B). In other words, the probability that gravity alone would have the right value by chance is 1 in 10²⁷⁹.

Or consider the cosmological constant, which governs the rate of expansion of the universe. For the universe to expand at just the right rate, not so quickly that matter could never clump together and not so slowly that everything would collapse back on itself, only one in 10⁵⁷ possible values will suffice.

To illustrate this staggering improbability, astrophysicist Trinh Xuan Thuan[7], in his book Le Chaos et l’Harmonie, offers a memorable analogy:

That number is so small that it corresponds to the probability that an archer would hit a 1 cm² target located at the other end of the universe, blindly shooting a single arrow from Earth and not knowing in which direction the target is.

Furthermore, when it comes to the strong nuclear force, physicists John Barrow and Frank Tipler[8] estimate that the probability of it having the precise value it does is 1 in 10³². Now, if we treat these three quantities (gravity, the cosmological constant, and the strong nuclear force) as independent and multiply their probabilities, the joint probability that all three simultaneously possess the exact values needed for life to exist is 1 in 10³⁶⁸ (the exponents add: 279 + 57 + 32 = 368).
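The multiplication behind that figure can be sketched as follows. This is only an illustration of the arithmetic, assuming the three estimates are independent of one another; the variable names are invented for the example. Because numbers this small fall below what ordinary floating-point values can hold, the sketch adds exponents rather than multiplying the probabilities directly.

```python
from fractions import Fraction

# Exponents of the three estimates quoted in the text ("1 in 10^n").
EXPONENTS = {
    "gravitational constant": 279,
    "cosmological constant": 57,
    "strong nuclear force": 32,
}

# Multiplying independent probabilities 10^-a * 10^-b * 10^-c
# is the same as adding the exponents a + b + c.
joint_exponent = sum(EXPONENTS.values())
print(f"1 in 10^{joint_exponent}")

# Cross-check with exact rational arithmetic (no rounding involved).
joint = Fraction(1)
for n in EXPONENTS.values():
    joint *= Fraction(1, 10**n)
assert joint == Fraction(1, 10**joint_exponent)

# An ordinary float cannot represent a number this small; it underflows to 0.0.
print(float(joint))
```

The independence assumption carries the whole calculation: if the three quantities were related to one another, their probabilities could not simply be multiplied.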

And keep in mind—that is just three out of the ninety-three known constants. We have not even accounted for the remaining ninety variables.

To put this into perspective: one of the most well-known lotteries in the world, the Powerball in the United States, requires matching five numbers out of sixty-nine plus one “Powerball” out of twenty-six. The odds of winning? 1 in 292,201,338, or roughly 1 in 2.92 × 10⁸, a probability we consider extremely remote.

But compared to the odds of 1 in 10³⁶⁸, the Powerball jackpot begins to look almost guaranteed.
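The Powerball figure and the comparison can be reproduced with a short calculation. The sketch below is illustrative only: it relies on Python's standard `math.comb`, and the names `powerball_odds` and `consecutive_wins` are invented here. It also asks a question the text does not pose directly, namely how many jackpots in a row would be about as unlikely as 1 in 10³⁶⁸.

```python
import math


def powerball_odds(white_pool: int = 69, white_picks: int = 5,
                   red_pool: int = 26) -> int:
    """Number of equally likely tickets: choose 5 of 69, times 1 of 26."""
    return math.comb(white_pool, white_picks) * red_pool


odds = powerball_odds()
print(f"1 in {odds:,}")  # 1 in 292,201,338

# How many consecutive jackpots would be about as unlikely as 1 in 10^368?
# Solve odds**k = 10**368 for k by taking base-10 logarithms.
consecutive_wins = 368 / math.log10(odds)
print(round(consecutive_wins, 1))
```

On these numbers, odds of 1 in 10³⁶⁸ are comparable to winning the jackpot between 43 and 44 times in a row with a single ticket each time.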

To attribute the precise calibration of the universe’s physical constants to random chance is to suggest a coincidence so vast, so wildly improbable, that it borders on the mathematically absurd. Far from a rational explanation, it becomes a leap of blind faith—one that ignores the overwhelming evidence of fine-tuning at the heart of the cosmos.

Matter and the forces that govern it appear to have been designed from the very beginning with precisely defined properties. This extraordinary precision suggests that the universe followed a blueprint—a design laid out by a Designer. To claim that such exact calibration arose purely by chance is not merely speculative—it would be the greatest leap of blind faith one could make.

As scientists began uncovering the extraordinary fine-tuning of the universe—forces, constants, ratios, velocities, and distances—many in the believing community found their convictions reinforced by nothing less than science itself. The smallest variation in these values would render the universe impossible. Such facts point convincingly to the existence of a superior intelligence—a Creator—who determined the physical laws with such harmony and precision that the formation of matter, stars, planets, and life became possible.

These revelations leave no room for randomness. The equation that governs our cosmos carries not the fingerprints of chaos, but the signature of purpose.

Even the world-renowned physicist Stephen Hawking[9], not known for endorsing theism, acknowledged the mystery. In his 1988 classic, A Brief History of Time, he wrote:

The laws of science, as we know them at present, contain many fundamental numbers, like the size of the electric charge of the electron and the ratio of the masses of the proton and the electron… The remarkable fact is that the values of these numbers seem to have been finely adjusted to make possible the development of life.

Similarly, Fred Hoyle[10], the esteemed British mathematician, physicist, and astronomer—himself an agnostic—confessed:

A commonsense interpretation of the facts suggests that a super intellect has monkeyed with physics, as well as with chemistry and biology, and that there are no blind forces worth speaking about in nature. The numbers one calculates from the facts seem to me so overwhelming as to put this conclusion beyond question.

Faced with such compelling implications, many atheist academics responded swiftly. Enter the theory of the multiverse[11]. Borrowed from the fringes of science fiction, this idea proposes that our universe is just one of trillions generated every second in a hypothetical “universe factory.” Each of these universes supposedly has different laws, constants, and parameters. Most are failures—disintegrating instantly due to unstable conditions—but by sheer statistical chance, ours happens to have the right values for life.

And what evidence supports the existence of this cosmic factory? None. Not even within the most speculative boundaries of science fiction did the concept hold such elevated status as it does now in some academic circles.

But here is the deeper issue: even if such a “factory” existed, it would not solve the problem; it would only push it back a step. Where did the factory come from? What forces and constants allowed it to operate? What raw materials did it use? What intelligence programmed it to test different values and produce functioning universes?

In the past, the origin of the universe was traced back to a single, mysterious “ball” of energy from which the Big Bang erupted. For believers, this origin was attributed to a Creator, who encoded the necessary laws and properties into matter. Nonbelievers, on the other hand, claimed this initial state had simply always existed, and that chance was responsible for everything that followed.

However, the overwhelming fine-tuning we observe today forced a shift: chance could no longer explain the current state of the universe. Thus, the multiverse theory was born—not from observation, but from the need to preserve a worldview without design.

Ironically, this new theory leads to the same kind of puzzle. The question once was: “Where did matter come from?” Now, it is: “Where did the factory come from?” And if even one universe strains our understanding, how much more incomprehensible would a mechanism capable of producing infinite universes be?

Once again, there is no answer.

[1]Niels Bohr was awarded the Nobel Prize in Physics in 1922. He was born in Copenhagen, Denmark, in 1885 and died there in 1962. Bohr contributed to the Manhattan Project, participating in the development of the first atomic bomb in the United States. Throughout his career, he frequently engaged in intellectual debates with Albert Einstein, particularly on the interpretation of quantum mechanics.

[2]This was a postulate of the English chemist, physicist, and mathematician John Dalton (1766–1844).

[3]This was the work of Russian chemist Dmitri Ivanovich Mendeleev (1834–1907).

[4]This is one of the four fundamental forces acting between subatomic particles. The other three are the electromagnetic force, the weak nuclear force, and the gravitational force.

[5]The strong nuclear force is approximately 137 times stronger than the electromagnetic force acting between protons.

[6]The gravitational force between two masses is described by the formula F = (G × m₁ × m₂) / d², where m₁ and m₂ represent the masses of the two objects in kilograms, d² is the square of the distance between them in meters, and G is the universal gravitational constant.

[7]Trinh Xuan Thuan (born August 20, 1948, in Hanoi) is a Vietnamese-American astrophysicist and author who writes in French. He was awarded the UNESCO Kalinga Prize in 2009 and the Cino Del Duca World Prize in 2012. Among his notable works is the book Le Chaos et l’Harmonie, in which he explores the numerical basis for the possible values of the cosmological constant related to the universe’s rate of expansion.

[8] John D. Barrow and Frank J. Tipler, The Anthropic Cosmological Principle (Oxford University Press, 1986).

[9] Stephen William Hawking (1942–2018) was a British theoretical physicist, cosmologist, and science communicator, renowned for his work on the origins and structure of the universe, particularly in the fields of black holes and cosmology. He was also known for engaging in discussions on the relationship between science and religion, including arguments against the necessity of a divine creator based on scientific reasoning.

[10]Fred Hoyle was responsible for one of the most significant discoveries of the 20th century: carbon nucleosynthesis. He was an active member of both the Royal Society and the American Academy of Arts and Sciences. Hoyle passed away in 2001.

[11]You can watch world-renowned naturalist Richard Dawkins explain this theory in the following video: https://www.youtube.com/watch?v=oO0QRUX4HGE